DORA sets the first EU-wide standard that requires financial institutions to manage their digital ICT risks. The regulation entered into force on January 16, 2023 and gives financial firms until January 17, 2025 to meet all requirements.

The Digital Operational Resilience Act (DORA) impacts more than just traditional financial institutions. Banks, insurance companies and critical ICT service providers that support the financial sector must comply. DORA strengthens operational resilience throughout the EU financial sector with five key elements: ICT Risk Management Framework, Incident Response Process, Security Testing, Third Party Risk mapping, and Threat Intelligence Sharing. Each EU member state used to have different regulations, but DORA creates a single binding framework for all European financial entities.

Let us break down what DORA requires and share practical ways to comply. You'll learn everything you need to meet the 2025 deadline successfully.

Understanding DORA Compliance Requirements in 2025

The EU has taken bold steps to fight growing digital threats by creating detailed legislation that changes how financial institutions handle ICT risks. Let's get into what makes this groundbreaking regulation so important.

What is the Digital Operational Resilience Act?

The Digital Operational Resilience Act (DORA) brings a unified legal framework that deepens the EU financial sector's commitment to digital operational resilience. DORA, officially known as Regulation (EU) 2022/2554, came into effect on January 16, 2023. Unlike scattered regulations before it, DORA helps financial institutions in the European Union align their ICT risk management practices.

DORA fills a vital gap in previous EU financial regulations. Financial entities used to manage operational risks by setting aside capital for potential losses. This approach fell short because it didn't cover everything about operational resilience, especially ICT risks.

Over 22,000 financial entities in the EU must follow DORA. The regulation covers 20 different types of financial organizations. It reaches beyond traditional banks to include crypto-asset providers, fund managers, crowdfunding platforms, and even critical ICT third-party service providers that support the financial ecosystem.

Key objectives of DORA regulation

DORA's main goal is to ensure that banks, insurance companies, investment firms, and other financial entities can withstand, respond to, and recover from ICT disruptions such as cyberattacks or system failures. DORA builds on five key pillars:

- ICT Risk Management: Firms move from reactive to proactive risk management through regular assessments, evaluation practices, mitigation strategies, incident response plans, and risk awareness initiatives

- Incident Reporting: The EU now has standard processes to monitor, detect, analyze, and report significant ICT-related incidents

- Digital Operational Resilience Testing: Financial institutions must prove they can withstand cyber threats through regular vulnerability assessments and response testing

- Third-Party Risk Management: Organizations must keep closer watch on their critical ICT service providers through detailed contracts and ongoing due diligence

- Information Sharing: The sector learns from shared experiences and lessons to improve operational resilience

DORA brings together previously scattered requirements. The organization's management body—including boards, executive leaders, and senior stakeholders—now has direct responsibility for ICT management. They must create appropriate risk-management frameworks, help execute and oversee these strategies, and stay up to date with evolving ICT risks.

January 17, 2025: The critical compliance deadline

European financial entities must comply with DORA by January 17, 2025. National competent authorities and European Supervisory Authorities (ESAs) will start their supervision on this date. These include the European Banking Authority (EBA), European Securities and Markets Authority (ESMA), and European Insurance and Occupational Pensions Authority (EIOPA).

Financial entities need their Registers of Information (RoI) ready by January 1, 2025. These registers must include detailed information about arrangements with ICT third-party service providers. The registers serve three purposes:

- They help financial entities track ICT third-party risk

- EU competent authorities use them to supervise risk management

- ESAs refer to them when designating critical ICT third-party service providers

The first submission of these registers to ESAs must happen by April 30, 2025. National supervisory authorities will gather this information from financial entities before this date.

Major ICT incidents need quick reporting under DORA. After an incident becomes "major," financial entities must send an initial notice within 4 hours. They follow up with an intermediate report within 72 hours and wrap up with a final report within a month.

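DORA violations can carry heavy penalties. Penalties for financial entities are set and applied by national competent authorities under Member State law, while the Lead Overseer can impose periodic penalty payments on critical ICT third-party service providers of up to 1% of their average daily worldwide turnover.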

Financial entities must move quickly to assess gaps, update policies, review third-party contracts, and set up strong ICT risk management frameworks before January 2025 arrives.

Who Must Comply with DORA Regulations?

DORA's regulatory authority goes well beyond previous EU financial regulations. Financial organizations must know if they need to comply with DORA rules before January 2025.

Financial entities within scope

DORA rules apply to many financial sector participants in the European Union, with 20 different categories under its umbrella. The list includes:

- Credit institutions and banks

- Payment institutions (including those exempt under Directive 2015/2366)

- Account information service providers

- Electronic money institutions

- Investment firms

- Crypto-asset service providers and issuers of asset-referenced tokens

- Central securities depositories

- Central counterparties

- Trading venues and trade repositories

- Alternative investment fund managers

- Management companies

- Data reporting service providers

- Insurance and reinsurance undertakings

- Insurance intermediaries and brokers

- Occupational retirement institutions

- Credit rating agencies

- Critical benchmark administrators

- Crowdfunding service providers

- Securitization repositories

DORA affects more than 22,000 financial entities that operate in the EU. Financial organizations without EU offices might still need to comply if they offer cross-border services or have supply chains linked to Europe.

ICT service providers and third parties

DORA creates new rules for Information and Communication Technology (ICT) third-party service providers. These companies provide digital and data services through ICT systems to users continuously.

ICT service providers face extra oversight when they support critical functions of financial entities. DORA sets up a new framework to watch over critical ICT third-party service providers (CTPPs).

The process to label an ICT provider as "critical" follows two steps:

- Quantitative assessment: Looks at market share (providers whose customers make up at least 10% of a financial entity category) and systemic importance

- Qualitative assessment: Checks impact intensity, service criticality, and how easily services can be replaced

Each CTPP gets one European Supervisory Authority as its "Lead Overseer" to manage risks. Non-critical providers must also follow DORA rules to keep serving their financial clients.

Proportionality principle: Requirements based on size and complexity

DORA's proportionality principle recognizes that identical rules won't work for every organization in the diverse financial world.

This principle makes financial entities follow DORA rules based on their:

- Size and overall risk profile

- Nature, scale and complexity of services

- Activities and operations

Every organization in scope must comply, but requirements vary. Microenterprises (fewer than 10 employees and yearly turnover under €2 million) have simpler rules than large institutions. Small enterprises (10-49 employees) and medium enterprises (under 250 employees) also get adjusted compliance targets.
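
As a rough illustration, the size bands above can be turned into a first-pass triage helper. This is a minimal sketch, not a legal test: `EntityProfile` and `size_band` are hypothetical names, and only the employee and turnover figures quoted in this article are used. Anything that is not a microenterprise is banded by headcount alone, which is a simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntityProfile:
    """Hypothetical input record; the field names are assumptions."""
    employees: int
    annual_turnover_eur: float


def size_band(profile: EntityProfile) -> str:
    """Return an illustrative size band for proportionality triage."""
    # Microenterprise: both the headcount and the turnover test must hold.
    if profile.employees < 10 and profile.annual_turnover_eur < 2_000_000:
        return "micro"
    # Simplification: everything else is banded by headcount only.
    if profile.employees < 50:
        return "small"
    if profile.employees < 250:
        return "medium"
    return "large"


print(size_band(EntityProfile(employees=8, annual_turnover_eur=1_500_000)))
print(size_band(EntityProfile(employees=120, annual_turnover_eur=40_000_000)))
```

The band only tells you which level of obligation to look up. It does not decide whether the entity is in scope.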

The proportionality principle shows up throughout DORA's framework in:

- ICT risk management implementation (Chapter II)

- Digital operational resilience testing (Chapter III)

- Third-party risk management (Chapter IV)

- Information sharing practices (Chapter V, Section I)

Authorities will check if organizations' ICT risk management matches their size and complexity. Small organizations still need to meet all requirements, just at a level that fits their size.

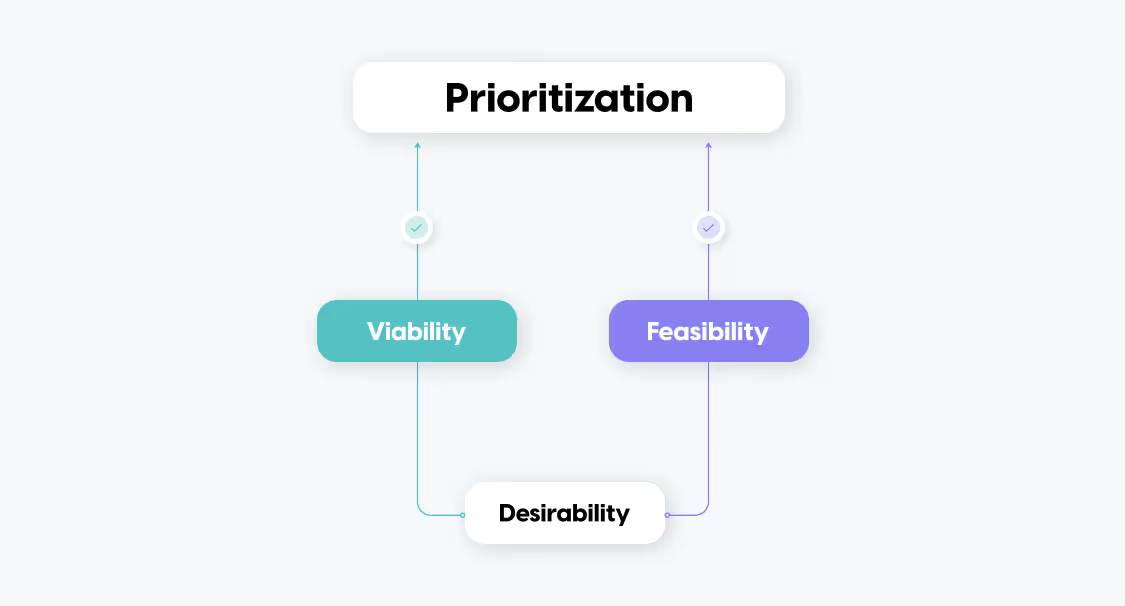

The 5 Core Pillars of DORA Compliance Framework

Europe needs a structured approach to digital operational resilience to boost financial stability. The Digital Operational Resilience Act lays out five main pillars that form the backbone of any successful DORA compliance framework.

ICT risk management fundamentals

DORA compliance starts with changing ICT risk management from reactive to proactive approaches. This pillar requires financial entities to create a robust, complete, and documented ICT risk management framework as part of their overall risk management system.

The framework must include strategies, policies, procedures, ICT protocols and tools to protect all information assets and ICT systems. Financial entities need to give responsibility for managing and overseeing ICT risk to a control function that stays independent enough to avoid conflicts of interest.

Most organizations must review their framework yearly, while microenterprises can do it periodically. Teams should keep improving the framework based on what they learn from implementation and monitoring. The framework also needs a digital operational resilience strategy that shows how it supports business goals while setting clear information security targets.

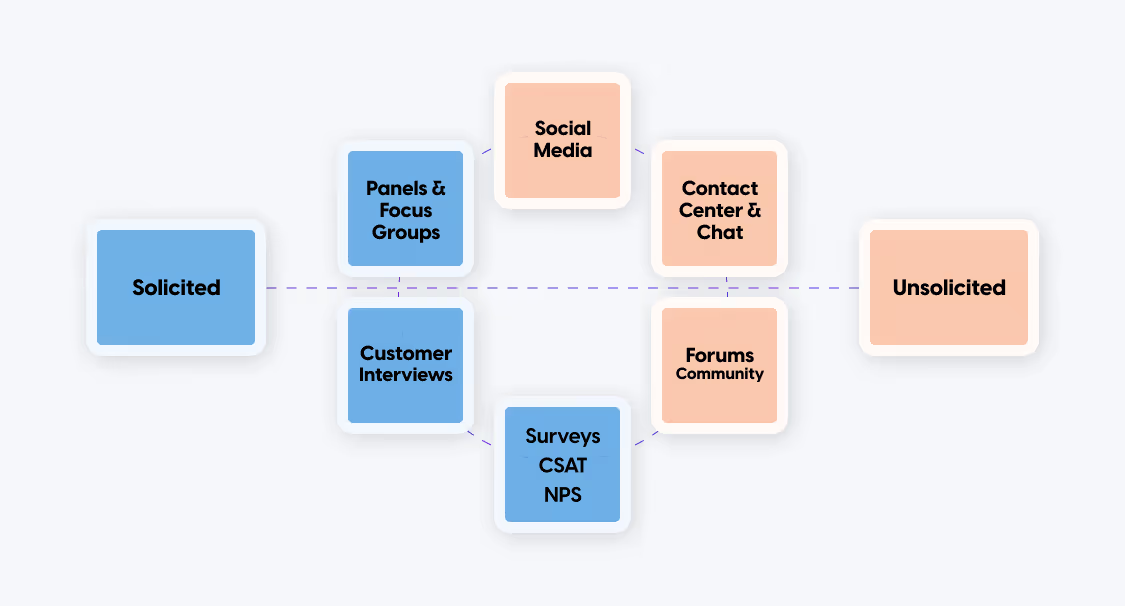

Incident reporting mechanisms

The second pillar aligns incident reporting across the financial sector through standard detection, classification, and reporting procedures. DORA makes these processes simpler and applies them to all financial entities.

Organizations must follow strict timelines. They need to submit their initial notification within 4 hours of classifying an incident as major, and no later than 24 hours after detecting it. An intermediate report follows within 72 hours, and a final report within a month. Beyond reporting major ICT incidents, organizations can also voluntarily report significant cyber threats.

Financial entities must tell their clients quickly when major ICT-related incidents affect their financial interests. Even if they outsource reporting to third-party providers, the financial entity still holds full responsibility for meeting all requirements.

Digital operational resilience testing

The third pillar calls for a complete digital operational resilience testing program. These tests help assess how ready an organization is to handle ICT-related incidents and spot weaknesses, gaps, and security issues.

DORA requires basic testing for all financial entities. Selected entities under specific oversight must also carry out advanced testing based on threat-led penetration testing (TLPT). Organizations run simulations and stress tests to check their cyber vulnerabilities and response capabilities, then use the results to improve their practices.

This testing helps financial institutions stand up to various cyber threats. They can keep operating during disruptions and bounce back quickly from attacks.

Third-party risk management

The fourth pillar tackles dependencies on external technology providers. DORA sets up principle-based rules for managing third-party risks within the ICT risk management framework and key contract provisions for ICT service providers.

Financial entities must thoroughly assess the risks tied to ICT third-party providers. This includes looking at operational risks, concentration risks, and system-wide impacts. Risk management efforts should match how critical the services are.

Contracts need detailed sections on risk management to make providers accountable for reducing risks. Organizations should have backup plans for critical ICT services in case key providers become unavailable. They also need to create and update a list of all ICT third-party providers and services, including contract details, criticality checks, and risk reviews.
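
A provider list like the one described above can start as a simple structured record. The sketch below is only an illustration: `RegisterEntry`, its fields, the provider names, and the 365-day review interval are all assumptions, not the official register template. It flags critical services that lack a backup plan and entries whose risk review is overdue.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class RegisterEntry:
    """One hypothetical row of an internal ICT third-party register."""
    provider: str
    service: str
    contract_end: date
    supports_critical_function: bool
    last_risk_review: date
    has_backup_plan: bool


def needs_attention(entries, today, max_review_age_days=365):
    """Return (provider, service, reasons) for every entry with open issues."""
    flagged = []
    for entry in entries:
        reasons = []
        if entry.supports_critical_function and not entry.has_backup_plan:
            reasons.append("critical service without backup plan")
        if (today - entry.last_risk_review).days > max_review_age_days:
            reasons.append("risk review overdue")
        if reasons:
            flagged.append((entry.provider, entry.service, reasons))
    return flagged


# Invented sample data for illustration only.
entries = [
    RegisterEntry("CloudCo", "core hosting", date(2026, 6, 30), True, date(2024, 9, 1), False),
    RegisterEntry("MailCo", "email", date(2025, 12, 31), False, date(2023, 11, 1), True),
    RegisterEntry("PayCo", "payments gateway", date(2026, 1, 31), True, date(2024, 10, 15), True),
]
flagged = needs_attention(entries, today=date(2025, 1, 17))
for provider, service, reasons in flagged:
    print(provider, service, reasons)
```

Keeping the checks as plain functions over the register makes it easy to add further rules, such as contracts that expire soon.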

Information sharing practices

The last pillar supports voluntary sharing of cyber threat information among financial entities. This includes sharing details about compromise indicators, tactics, techniques, procedures, cybersecurity alerts, and configuration tools.

These exchanges happen in trusted financial entity communities to boost digital operational resilience. Information-sharing setups need clear rules for joining and must protect sensitive shared data while following business privacy and data protection laws.

Financial entities must let authorities know when they join these sharing arrangements. Working together helps organizations learn from each other's knowledge and experiences. This makes them better at spotting and handling digital challenges.

Building Your DORA Compliance Roadmap

The January 2025 DORA compliance deadline looms closer for financial firms. A well-laid-out roadmap will help prevent last-minute chaos and ensure your organization meets all requirements.

12-month implementation timeline

DORA applies from January 17, 2025. Financial institutions must start their compliance journey now if they haven't already. Here's an effective 12-month plan with critical milestones:

Months 1-3 (Q2 2024): Complete the initial DORA review, build your project team, and perform detailed gap analysis.

Months 4-6 (Q3 2024): Create remediation options, develop complete project plans, and secure approval from senior management.

Months 7-9 (Q4 2024): Make essential changes to ICT risk management frameworks, incident response procedures, and third-party management processes.

Months 10-12 (Q1 2025): Complete implementation, test thoroughly, and prepare for the January 17 deadline.

Your timeline should be flexible enough to include updates from the second batch of DORA standards finalized in July 2024. This step-by-step approach lets organizations address all requirements while keeping operations running smoothly.

Gap analysis methodology

A thorough gap analysis reveals your organization's current position against DORA requirements. Compliance experts suggest these steps:

- Build a detailed mapping matrix that compares your current policies with DORA requirements across all five pillars

- Use a RAG (Red-Amber-Green) status system to score your compliance level

- Spot specific areas where you don't comply fully or partially

- Check if your systems, processes, and risk management measures line up with DORA requirements

Gap analysis tools can make this process easier by customizing questions for your organization type. These assessments should look at your ICT risk management framework against DORA's five core pillars and highlight areas needing improvement.
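
The mapping matrix and RAG scoring described above can be prototyped in a few lines before investing in a dedicated tool. This is a minimal sketch under assumed inputs: the requirement names and statuses are invented examples, and `summarize` is a hypothetical helper that reports, per pillar, the status counts and the worst status found.

```python
from collections import Counter

# Severity order used to pick the worst status per pillar.
SEVERITY = {"green": 0, "amber": 1, "red": 2}


def summarize(matrix):
    """matrix: iterable of (pillar, requirement, status) tuples."""
    summary = {}
    for pillar, _requirement, status in matrix:
        entry = summary.setdefault(pillar, {"counts": Counter(), "worst": "green"})
        entry["counts"][status] += 1
        if SEVERITY[status] > SEVERITY[entry["worst"]]:
            entry["worst"] = status
    return summary


# Invented sample rows; real rows come from your own policy mapping.
matrix = [
    ("ICT risk management", "Framework reviewed yearly", "green"),
    ("ICT risk management", "Independent control function", "amber"),
    ("Incident reporting", "4-hour notification process", "red"),
    ("Incident reporting", "Classification criteria documented", "amber"),
    ("Third-party risk", "Provider register complete", "green"),
]
summary = summarize(matrix)
for pillar, entry in summary.items():
    print(pillar, entry["worst"], dict(entry["counts"]))
```

Reporting the worst status per pillar, rather than an average, keeps a single red item from being hidden by several green ones.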

Resource allocation and budgeting

DORA compliance needs careful resource planning. Your financial assessment should cover these cost areas:

Operational costs: Regular expenses for audits, security testing, and employee training

Infrastructure upgrades: Better cybersecurity systems and incident response capabilities

Technology assessment: Review of existing technologies against compliance needs

Third-party vendor assessments: Money for audits or certifications of service providers

Organizations should set aside budgets for technology upgrades, expert help, and staff training. DORA requirements affect multiple teams, so resources must reach cybersecurity, risk management, business continuity, and regulatory compliance departments.

Stakeholder engagement strategy

DORA compliance needs teamwork across your organization. Senior management must support the initiative from day one, even though some organizations struggled to plan while the technical standards were still being finalized.

Here's how to get stakeholder support:

- Run workshops to teach business units about DORA's importance

- Make sure all departments agree on fixes

- Get senior leadership to commit necessary resources

- Set up clear roles and responsibilities through formal governance

DORA makes senior management and boards directly responsible for ICT risk governance. They need simple reporting tools and focused training on key requirements.

Track progress regularly and report to senior management. Flag problems quickly so they can be fixed, as many organizations face tight deadlines. This organized approach will help financial firms meet the critical January 2025 DORA compliance deadline successfully.

Essential DORA Compliance Checklist for Financial Firms

A detailed compliance checklist is an essential tool for financial institutions that need to navigate DORA requirements. This practical framework shows the documentation and procedures needed to meet the January 2025 deadline.

ICT risk management documentation requirements

Financial entities need to maintain a sound, complete, and well-documented ICT risk management framework as part of their risk management system. This framework should have:

- Strategies, policies, procedures, and ICT protocols that protect information and ICT assets

- Complete documentation of physical components and infrastructures, including premises and data centers

- A full picture of ICT risk management strategies and controls

The framework needs review once every year (or periodically for microenterprises) and after major ICT-related incidents. Financial entities, except microenterprises, must give responsibility for managing ICT risk to a control function with enough independence. The ICT risk management framework needs regular internal audits by qualified auditors who know ICT risk.

Incident classification and reporting procedures

DORA needs a structured way to classify and report incidents based on specific criteria. Financial entities must classify ICT-related incidents by:

- Number of clients, financial counterparts and transactions affected

- Duration and service downtime

- Geographical spread

- Data losses (including successful malicious unauthorized access)

- Critical services affected

- Economic impact

Financial firms must report major incidents on this timeline:

- Initial notification: Within 4 hours after classification

- Intermediate report: Within 72 hours

- Final report: After root cause analysis completion (within one month)

Organizations should know that "critical services affected" is a mandatory condition for classifying an incident as major. The data loss criterion is met automatically when malicious unauthorized access to network and information systems succeeds, regardless of whether the data was exploited.

Testing protocols and documentation

DORA requires a complete testing program with various security assessments. Financial entities must run vulnerability scans, network security assessments, open source analyses, physical security reviews, and security questionnaires.

Organizations other than microenterprises must test all ICT systems and applications that support critical functions yearly. Threat-led penetration testing (TLPT) needs:

- Testing on live production systems

- Testing at least every three years (depending on risk profile)

- Submission of findings, corrective action plans, and compliance documentation

Financial entities must set up validation methods to check if all identified weaknesses get fixed. The testing framework should show ways to prioritize, classify, and fix issues found during assessments.

Third-party contract review process

Financial firms must review their ICT third-party service providers' contracts to ensure DORA compliance. Key contract provisions must have:

- Clear security requirements and measures

- Incident reporting obligations and timelines

- Review capabilities for security practices

- Business continuity arrangements

Financial entities should identify and document all ICT services and define their "critical and important" functions. High-risk providers' contracts need more frequent reviews.

Organizations can streamline contract reviews by doing complete reviews of current agreements with clause updates or adding a "DORA Addendum" that overrides the main agreement. Financial entities stay fully responsible for compliance even when using outsourced ICT services.

Implementing Effective ICT Risk Management

ICT risk management is the foundation of DORA compliance. It needs practical steps instead of theoretical frameworks. Financial entities should turn regulatory requirements into operational processes that boost their digital resilience against potential threats.

Asset inventory and classification

ICT risk management starts with detailed identification and classification of all digital assets. Under DORA, financial entities must "identify, classify and adequately document all ICT supported business functions, roles and responsibilities". The inventory should have:

- All information assets and ICT systems, including remote sites and network resources

- Hardware equipment and critical infrastructure components

- Configurations and interdependencies between different assets

These inventories need updates when major changes happen. Financial entities should identify and document processes that depend on ICT third-party service providers, especially those that support critical functions.
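
Recording interdependencies pays off once you can query them. The sketch below assumes a hand-maintained dependency map (`DEPENDS_ON`, with invented asset names) and uses a breadth-first walk to list everything a business function ultimately relies on, including third-party services.

```python
from collections import deque

# Hypothetical inventory: each asset maps to the assets it depends on directly.
DEPENDS_ON = {
    "payments-api": ["core-banking-db", "hsm-cluster"],
    "core-banking-db": ["storage-array", "cloud-backup (third party)"],
    "hsm-cluster": [],
    "storage-array": [],
    "cloud-backup (third party)": [],
}


def transitive_dependencies(asset, graph):
    """Return every asset reachable from `asset` through dependency links."""
    seen = set()
    queue = deque(graph.get(asset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited; also protects against cycles
        seen.add(current)
        queue.extend(graph.get(current, []))
    return seen


print(sorted(transitive_dependencies("payments-api", DEPENDS_ON)))
```

The same walk can be run from each critical function to show which third-party services sit underneath it.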

Risk assessment methodology

After proper asset cataloging, financial institutions must "continuously identify all sources of ICT risk". DORA requires a systematic approach. Entities should review, at least yearly, the risk scenarios that could affect their operations.

The assessment process evaluates:

- Risks from interconnections with other financial entities

- Vulnerabilities in the organization's digital infrastructure

- Potential effects on critical business functions

Financial entities other than microenterprises must perform risk assessments "upon each major change in the network and information system infrastructure". The same applies before and after connecting new technologies or applications.

Security controls implementation

DORA requires financial entities to "minimize the impact of ICT risk by deploying appropriate strategies, policies, procedures, ICT protocols and tools". This means implementing:

- Information security policies that define rules to protect data confidentiality, integrity and availability

- Network and infrastructure management with appropriate techniques and isolation mechanisms

- Access control policies that limit physical and logical access to necessary levels

- Strong authentication mechanisms and cryptographic protections based on risk assessment results

Among other technical controls, entities should create "documented policies, procedures and controls for ICT change management". This ensures all system modifications follow controlled processes.

Continuous monitoring approach

The final component needs constant watchfulness over ICT systems. Financial entities must "continuously monitor and control the security and functioning of ICT systems and tools". This helps detect potential issues before they become incidents.

Effective monitoring needs automated tools that track system activity and generate alerts for suspicious behavior. Organizations should implement Security Information and Event Management (SIEM) solutions. These provide live visibility into risk metrics, control performance, and system health.
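
To make "alerts for suspicious behavior" concrete, here is a toy detection rule of the kind a monitoring platform would evaluate. It is a sketch under assumptions, not a SIEM: the event format, the threshold of five failures, and the ten-minute window are all hypothetical choices. It raises an alert when one user accumulates too many failed logins inside a sliding time window.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def failed_login_alerts(events, threshold=5, window=timedelta(minutes=10)):
    """events: (timestamp, user, outcome) tuples sorted by timestamp.

    Returns (user, timestamp) each time a user's failure count inside
    the window reaches the threshold.
    """
    recent = defaultdict(deque)  # user -> timestamps of recent failures
    alerts = []
    for timestamp, user, outcome in events:
        if outcome != "failure":
            continue
        failures = recent[user]
        failures.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while timestamp - failures[0] > window:
            failures.popleft()
        if len(failures) == threshold:
            alerts.append((user, timestamp))
    return alerts


# Invented sample events for illustration only.
start = datetime(2025, 1, 17, 9, 0)
events = [(start + timedelta(minutes=i), "alice", "failure") for i in range(5)]
events.append((start + timedelta(minutes=6), "bob", "failure"))
alerts = failed_login_alerts(events)
print(alerts)
```

In practice such rules live inside the monitoring tool itself. The value of writing one out is to agree on the threshold and window before configuring it.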

Financial institutions can build resilient ICT risk management programs by applying these steps systematically. This approach meets DORA requirements and strengthens operational resilience.

DORA-Compliant Incident Response Planning

A resilient incident response framework serves as a key regulatory requirement under DORA. Financial firms need well-laid-out processes to classify, report, and learn from ICT-related incidents before January 2025.

Incident classification framework

DORA requires classification of ICT-related incidents based on seven criteria: number of clients affected, effect on reputation, duration and service downtime, geographical spread, data losses, critical services affected, and economic impact. An incident becomes "major" when it affects critical services and hits specific materiality thresholds. The European Supervisory Authorities state that "critical services affected" must be present to call an incident major. On top of that, any successful malicious unauthorized access to network systems automatically triggers the "data loss" criterion, regardless of whether the data was exploited.
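
The decision logic above can be sketched as a small function. Treat this as an illustration only: `IncidentAssessment` and `is_major` are hypothetical names, each flag means "the materiality threshold for this criterion was met", and the number of further criteria required (`min_other_criteria`) is a parameter you must set from the applicable technical standards, because this article does not state it.

```python
from dataclasses import dataclass


@dataclass
class IncidentAssessment:
    """Each flag records whether that criterion's threshold was met."""
    critical_services_affected: bool
    clients_affected: bool = False
    reputational_impact: bool = False
    duration_and_downtime: bool = False
    geographical_spread: bool = False
    data_losses: bool = False
    economic_impact: bool = False
    malicious_access_succeeded: bool = False


def is_major(incident: IncidentAssessment, *, min_other_criteria: int) -> bool:
    """Apply the mandatory condition, then count the remaining criteria."""
    # "Critical services affected" is mandatory for a major incident.
    if not incident.critical_services_affected:
        return False
    # Successful malicious access triggers the data loss criterion.
    data_losses = incident.data_losses or incident.malicious_access_succeeded
    others = [
        incident.clients_affected,
        incident.reputational_impact,
        incident.duration_and_downtime,
        incident.geographical_spread,
        data_losses,
        incident.economic_impact,
    ]
    return sum(others) >= min_other_criteria


outage = IncidentAssessment(
    critical_services_affected=True,
    duration_and_downtime=True,
    malicious_access_succeeded=True,
)
print(is_major(outage, min_other_criteria=2))
```

Keeping the count as an explicit argument forces whoever calls the function to record which rule they are applying.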

Reporting timelines and requirements

Major incidents require financial entities to meet strict reporting deadlines:

- Initial notification: Within 4 hours after classification (no later than 24 hours after detection)

- Intermediate report: Within 72 hours of the initial notification

- Final report: No later than one month after the intermediate report

Most financial entities can submit reports by noon the next working day if deadlines fall on weekends or holidays. This flexibility doesn't apply to credit institutions, central counterparties, trading venues, and entities identified as essential or important.
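
The deadlines above chain together, so it helps to compute them from two timestamps: when the incident was detected and when it was classified as major. The sketch below is a simplified illustration with assumed names. It treats "one month" as 30 days, assumes each report is filed exactly at its deadline, and ignores the weekend and holiday flexibility described above.

```python
from datetime import datetime, timedelta

INITIAL_AFTER_CLASSIFICATION = timedelta(hours=4)
INITIAL_AFTER_DETECTION = timedelta(hours=24)
INTERMEDIATE_AFTER_INITIAL = timedelta(hours=72)
FINAL_AFTER_INTERMEDIATE = timedelta(days=30)  # assumption: one month taken as 30 days


def reporting_deadlines(detected_at: datetime, classified_at: datetime) -> dict:
    """Return the latest allowed time for each report in the chain."""
    # The first notification is due at whichever limit comes first.
    initial = min(
        classified_at + INITIAL_AFTER_CLASSIFICATION,
        detected_at + INITIAL_AFTER_DETECTION,
    )
    intermediate = initial + INTERMEDIATE_AFTER_INITIAL
    final = intermediate + FINAL_AFTER_INTERMEDIATE
    return {"initial": initial, "intermediate": intermediate, "final": final}


deadlines = reporting_deadlines(
    detected_at=datetime(2025, 3, 3, 9, 0),
    classified_at=datetime(2025, 3, 3, 11, 0),
)
for name, due in deadlines.items():
    print(name, due.isoformat())
```

If a report is filed early, recompute the later deadlines from the actual submission time rather than from the theoretical limit.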

Root cause analysis methodology

Article 17 of DORA requires financial entities to "set up proper procedures and processes to ensure consistent and integrated monitoring, handling and follow-up of ICT-related incidents, to ensure that root causes are identified, documented and addressed". This analysis must look into what caused the disruption and identify improvements needed in ICT operations or business continuity policy.

Post-incident review process

Post-incident reviews need to check whether teams followed established procedures and whether their actions worked. The review must examine:

- Speed of alert response and impact determination

- Quality and speed of forensic analysis

- Internal escalation effectiveness

- Internal and external communication effectiveness

Financial firms must then use these lessons in their ICT risk assessment process to improve their digital operational resilience strategy.

Third-Party Risk Management Under DORA

DORA compliance frameworks put vendor relationship management at their core. Financial entities must tackle their digital supply chain risks with well-laid-out approaches throughout the third-party lifecycle.

Critical service provider identification

Financial entities need to determine which ICT service providers support their critical business functions. Service disruptions could materially hurt financial performance, service continuity, or regulatory compliance. The identification process maps all contractual arrangements with ICT vendors. It clearly distinguishes between providers that support critical versus non-critical functions. The assessments must evaluate what disruptions mean for the system, how much they rely on providers, and challenges in replacing them.

Contract requirements and negotiation strategies

DORA mandates detailed contractual provisions after critical providers are identified. ICT service agreements must cover security requirements, data protection, service levels, and business continuity arrangements. Contracts that support critical functions need additional provisions. These include incident support at preset costs and participation in security awareness programs. Financial entities don't need to completely rewrite agreements. They can review end-to-end with clause updates or add a "DORA Addendum" that takes precedence over the main agreement.

Ongoing monitoring and assessment

Constant watchfulness matters throughout vendor relationships. DORA requires regular evaluation through performance indicators, control metrics, audits, and independent reviews. Financial entities must track their vendor ecosystem's data confidentiality, availability, integrity, and authenticity. This monitoring should spot problems and trigger fixes within set timeframes.

Exit strategy planning

DORA requires detailed exit strategies, above all for providers that support critical functions. These plans must handle persistent service interruptions, failed delivery, or unexpected contract endings. Exit strategies should enable smooth transitions. Business activities, regulatory compliance, and client service quality must not suffer. Recent surveys show a major compliance gap before the 2025 deadline. Only 20% of financial professionals say they have proper stressed exit plans ready.

Conclusion

Financial institutions are facing new challenges with DORA's January 2025 deadline on the horizon. This detailed regulation requires proper preparation in five key areas: ICT risk management, incident reporting, resilience testing, third-party oversight, and information sharing.

Organizations need to implement resilient frameworks to succeed. They must create detailed asset lists, develop response procedures, assess risks fully, and keep thorough records. Third-party relationships require extra focus with careful provider reviews, contract evaluations, and backup plans.

The clock is ticking. Financial entities should start their gap analysis now, assign the right resources, and get stakeholders involved at every level. Regular checks will keep compliance measures working and ready for new threats.

DORA goes beyond just following rules - it creates the foundation for lasting operational strength in today's digital financial world. Companies that embrace these requirements can better shield their operations, help clients, and keep European financial markets stable.

Financial institutions can turn these regulatory requirements into real operational advantages by preparing carefully and implementing DORA's guidelines systematically. This approach ensures their continued success as the digital environment evolves.

January 17, 2025 marks a pivotal moment that will change how 20 different types of financial entities handle their digital operations. This detailed framework impacts banks, insurance companies, and investment firms. It introduces strict requirements for ICT risk management and operational resilience.

DORA compliance represents a shift from conventional risk management approaches. The regulation acknowledges that ICT incidents could destabilize the entire financial system, even with proper capital allocation to standard risk categories. Financial entities must prepare for sweeping changes. They need to maintain detailed ICT third-party service provider registers by January 2025. Their first Registers of Information must be submitted by April 2025.

This piece examines the less obvious technical requirements of EU DORA. It breaks down the complex framework to give you useful insights. You'll learn about everything from mandatory infrastructure specifications to advanced testing methods. This knowledge will help your organization implement the necessary changes before the regulation takes effect.

DORA Regulation Framework: Beyond Surface-Level Compliance

The EU's Digital Operational Resilience Act creates a single legal framework that changes how financial entities manage ICT risks. DORA became active on January 16, 2023. Financial organizations must meet its technical requirements by the set deadline.

Key Dates and Enforcement Timeline for EU DORA

The DORA implementation roadmap includes these important dates:

- January 16, 2023: DORA became active

- January 17, 2024: First set of regulatory technical standards arrived

- July 17, 2024: Second set of policy standards and Delegated Act on Oversight will be ready

- January 17, 2025: DORA applies to all entities within its scope

- April 2025: Financial entities need to submit details about their critical ICT service providers

- July 2025: European Supervisory Authorities (ESAs) will finish their assessments and let critical ICT third-party service providers know their status

Financial institutions have about two years from DORA's start date to meet its requirements. The second set of standards comes out in July 2024, leaving organizations just six months to get everything ready before January 2025.
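
The timeline arithmetic above is easy to check. The snippet below is a small sketch that uses only the dates listed in this section; the variable and function names are illustrative.

```python
from datetime import date

# Dates taken from the timeline above.
entered_into_force = date(2023, 1, 16)
second_batch = date(2024, 7, 17)
application_date = date(2025, 1, 17)

def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1
    return months

total_months = months_between(entered_into_force, application_date)
final_stretch = months_between(second_batch, application_date)
print(total_months, final_stretch)  # 24 6
```

The result matches the text: roughly two years overall, with six months between the second batch of standards and the application date.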

Scope of Financial Entities Under DORA Regulation

DORA applies to more than 22,000 entities across the EU. Article 2 lists 20 types of financial entities that must comply:

- Credit institutions and payment institutions

- Electronic money institutions

- Investment firms and trading venues

- Crypto-asset service providers and issuers of asset-referenced tokens

- Insurance and reinsurance undertakings

- Central securities depositories and central counterparties

- Trade repositories and securitization repositories

- Credit rating agencies

- Data reporting service providers

- Crowdfunding service providers

- Asset managers and pension funds

The regulation also covers ICT third-party providers working with these financial entities, especially those labeled as "critical" (CTPPs). ESAs decide who gets this label based on their importance to the system, how much others depend on them, and whether they can be replaced easily.

DORA takes a balanced approach. Article 4 says implementation should match each entity's size, risk profile, and business model. This means the rules will affect organizations differently based on their current ICT risk management practices.

How DORA Is Different from Previous ICT Regulations

DORA changes ICT regulatory frameworks in several important ways:

Single Standard: DORA replaces the scattered rules for ICT resilience across EU countries. Now financial entities follow one consistent standard instead of different national requirements.

ICT-Specific Focus: Traditional frameworks mostly looked at capital allocation. DORA recognizes that ICT problems can threaten financial stability even with good capital reserves. The rules target digital threat resilience rather than just financial safety nets.

Broader Oversight: DORA lets regulators directly supervise critical ICT third-party providers. This creates a complete system for monitoring financial services technology. European Supervisory Authorities (EBA, ESMA, EIOPA) lead the oversight of CTPPs across Europe.

New Technical Rules: DORA requires several specific measures:

- Risk management systems to find, assess and handle ICT-related risks

- Systems to detect, report and respond to incidents quickly

- Digital operational resilience testing

- Detailed records of ICT third-party service providers

DORA takes priority over both the Network and Information Security (NIS2) Directive and the Critical Entities Resilience (CER) Directive if their rules conflict.

Hidden ICT Governance Requirements in DORA

DORA's technical requirements have a hidden layer of governance rules that financial entities often miss. These rules bring fundamental changes to ICT governance structures. Financial entities should pay attention to these changes well before January 2025.

Mandatory ICT Role Assignments and Reporting Lines

DORA requires specific organizational structures with clear ICT risk management responsibilities. Financial entities must assign a dedicated control function to oversee ICT risk. This control function must maintain "an appropriate level of independence" to avoid conflicts of interest in technology risk management.

DORA requires financial entities to follow the "three lines of defense" model or an equivalent internal risk management and control model. The model works like this:

- First line (operational functions): Day-to-day ICT risk management

- Second line (risk management functions): Independent oversight and monitoring

- Third line (internal audit): Independent assurance activities

Financial entities must set up clear reporting channels so that the management body is promptly notified about major ICT incidents and changes to third-party arrangements. DORA also says financial entities should either establish a dedicated role to monitor ICT service provider arrangements or designate a senior manager to oversee the related risk exposure and documentation.

Documentation Standards for ICT Risk Management

DORA sets out detailed documentation rules that need regular updates and reviews. Teams should review the ICT risk management framework thoroughly at least once a year. Additional reviews are required after major ICT incidents or when supervisors find issues. A formal report on the review must be available whenever authorities ask for it.

The rules say financial entities must create and keep documented policies, standards, and procedures for ICT risk identification, monitoring, reduction, and reporting. Regular reviews ensure these documents work, and teams must keep evidence like board minutes and audit reports for compliance.

DORA says entities must create a complete digital operational resilience strategy as part of their ICT risk framework documents. This strategy shows how to implement everything and might include an "integrated ICT multi-vendor strategy" that shows service provider dependencies and explains buying decisions.

Board-Level Technical Knowledge Requirements

DORA puts the ultimate responsibility for ICT risk management on the management body (board of directors). Article 5 states that the board has "ultimate responsibility" for the entity's ICT risk management. No individual, group, or third party can take over this responsibility.

The board's ICT duties include:

- Defining and approving the entity's DORA strategy

- Setting up proper governance arrangements with clear roles

- Watching over ICT business continuity arrangements

- Reviewing and approving ICT audit arrangements

- Making sure there are enough resources for DORA compliance

- Approving and checking third-party ICT arrangements regularly

DORA requires board members to know enough about ICT risks to do their job. Regulators will consider "sufficient knowledge and skills in ICT risks" when deciding if board members are suitable. This marks a big change as technical skills become a must-have rather than just nice-to-have.

Board members must understand the basic technical aspects of ICT security, why resilience matters, the specific ICT risks their organization faces, and how to reduce these risks. DORA makes ICT security training mandatory for everyone, including board members.

Technical Infrastructure Specifications for DORA Compliance

DORA's technical infrastructure requirements outline the architectural and system specifications that financial entities need to implement by January 2025. These specifications are the foundations of operational resilience, and they go well beyond policy frameworks into real system implementations.

Network Segmentation and Architecture Requirements

DORA requires network segmentation that can isolate affected components right away during cyber incidents. Financial entities should design their network infrastructure so teams can "instantaneously sever" connections to stop problems from spreading, especially in interconnected financial processes. This requirement protects financial systems from widespread failures.

DORA lists these technical parameters for network architecture:

- Segregation and segmentation of ICT systems based on how critical they are, their classification, and the overall risk profile of assets using those systems

- Detailed documentation of network connections and data flows throughout the organization

- Dedicated administration networks kept separate from operational systems to boost security

- Network access controls that block unauthorized devices or endpoints that don't meet security requirements

- Encrypted network connections for data moving through corporate, public, domestic, third-party, and wireless networks

Financial entities should review their network architecture and security design yearly. More frequent reviews become necessary after big changes or incidents. Organizations that support critical functions must check their firewall rules and connection filters every six months.
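
To make the segmentation and review rules more concrete, here is a minimal sketch of how documented data flows could be checked against a segmentation policy, along with a simple review-cadence check. The segment names, the `ALLOWED_FLOWS` allowlist, and both helper functions are hypothetical illustrations, not anything prescribed by DORA.

```python
from datetime import date, timedelta

# Hypothetical segments and the flows the policy explicitly allows.
ALLOWED_FLOWS = {
    ("admin", "core-banking"),
    ("core-banking", "payments"),
    ("office", "dmz"),
}

def undocumented_flows(observed):
    """Return observed (source, destination) pairs missing from the policy."""
    return sorted(set(observed) - ALLOWED_FLOWS)

def review_overdue(last_review: date, today: date, critical: bool) -> bool:
    """Six-month cadence for critical systems, yearly otherwise (approximated in days)."""
    max_age = timedelta(days=183 if critical else 365)
    return today - last_review > max_age

observed = [("office", "dmz"), ("office", "core-banking"), ("admin", "core-banking")]
violations = undocumented_flows(observed)
print(violations)  # [('office', 'core-banking')]
print(review_overdue(date(2024, 1, 10), date(2024, 9, 1), critical=True))  # True
```

In practice the observed flows would come from firewall logs or flow records, and any pair outside the allowlist would be a candidate for blocking or for documenting.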

System Redundancy Technical Specifications

DORA requires financial entities to keep redundant ICT capacities with enough resources and capabilities to keep business running. This goes beyond simple backup systems into reliable resilience architecture.

Central securities depositories must have a secondary processing site with these technical features:

- A location far enough from the main site to have different risk exposure

- The ability to keep critical functions running just like the main site

- Quick access for staff to maintain service when the main site goes down

The rules also say financial entities need both primary and backup communication systems. This dual setup lets organizations keep talking even during cyber incidents through separate communication systems on independent networks.

Microenterprises can decide what redundant systems they need based on their risk profile, but they still need some backup plans.

Data Backup Technical Parameters

DORA sets strict rules for data backup and restoration. Financial entities must create backup policies that spell out:

- What data needs backing up

- How often backups should happen based on data's importance and privacy needs

- Step-by-step restoration procedures with proven methods

Organizations must set up backup systems they can start quickly using documented steps. When restoring data, they need ICT systems physically and logically separate from source systems to avoid contamination.

DORA says recovery must be secure and quick. Financial entities must test their backup procedures regularly to make sure they work. These tests help build confidence that data protection will work when needed.

Organizations must look at how critical each function is and how it might affect market efficiency when setting recovery goals. After recovery, teams must run multiple checks to ensure data stays intact.
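
A backup policy like the one described above can be expressed as data and checked automatically. The sketch below is one possible shape for such a check. The criticality tiers, the `MAX_INTERVAL_HOURS` values, and the environment names are assumptions made for illustration; real values come from each entity's own risk assessment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BackupRule:
    dataset: str
    criticality: str          # "critical", "important", or "standard" (illustrative tiers)
    interval_hours: int       # how often a backup is taken
    restore_environment: str  # where restores are staged
    source_environment: str   # where the live data resides

# Hypothetical maximum backup intervals per tier, not values from the regulation.
MAX_INTERVAL_HOURS = {"critical": 4, "important": 24, "standard": 168}

def policy_findings(rule: BackupRule) -> list[str]:
    """Return the policy problems found for one backup rule."""
    findings = []
    if rule.interval_hours > MAX_INTERVAL_HOURS[rule.criticality]:
        findings.append("backup interval too long for criticality tier")
    if rule.restore_environment == rule.source_environment:
        findings.append("restore environment is not separated from the source system")
    return findings

ledger = BackupRule("payments-ledger", "critical", 12, "prod-cluster", "prod-cluster")
archive = BackupRule("marketing-archive", "standard", 168, "restore-lab", "prod-cluster")
print(policy_findings(ledger))   # two findings
print(policy_findings(archive))  # []
```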

These technical rules work together to give financial entities reliable protection against ICT problems, which helps keep the EU financial sector's digital operations strong.

Advanced Testing Requirements Under DORA Framework

DORA brings in strict advanced testing protocols that financial firms must put in place to keep their digital systems reliable. These rules go well beyond regular security testing. They call for detailed assessment methods to confirm ICT systems can handle sophisticated threats.

TLPT Technical Specifications and Methodologies

Threat-Led Penetration Testing (TLPT) is the cornerstone of DORA's advanced testing requirements. It's now mandatory for designated financial entities. Article 26 says these firms should run TLPT at least once every three years. This testing framework builds on the existing TIBER-EU model with several vital changes.

TLPT methods work in three main phases:

- Preparation phase: Setting the scope, building control teams, and picking testers

- Testing phase: Covers threat intelligence, red team test planning, and active testing

- Closure phase: Includes purple teaming exercises and plans to fix issues

The active testing phase should run at least 12 weeks to mimic how stealthy threat actors operate. Financial entities should identify all the key ICT systems that support critical functions and determine which of those functions should get TLPT coverage. After that, designated authorities should confirm the exact TLPT scope.

Red teams must have certified testers with specific qualifications. Each team needs a manager with five years of experience and at least two more testers. These additional testers should have two years of experience in penetration testing.
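
The team composition and three-year cycle described above lend themselves to a simple pre-check before a test is scheduled. This is a rough sketch based only on the numbers in this section; the `Tester` class and both functions are hypothetical, and a real check would also cover certifications.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Tester:
    name: str
    years_experience: int
    is_manager: bool = False

def red_team_issues(team):
    """Check the composition rules described above; returns a list of problems."""
    issues = []
    managers = [t for t in team if t.is_manager]
    others = [t for t in team if not t.is_manager]
    if not any(m.years_experience >= 5 for m in managers):
        issues.append("no manager with at least five years of experience")
    if len(others) < 2:
        issues.append("fewer than two additional testers")
    if any(t.years_experience < 2 for t in others):
        issues.append("an additional tester has under two years of experience")
    return issues

def next_tlpt_due(last_test: date) -> date:
    """Latest date for the next test under a three-year cycle."""
    try:
        return last_test.replace(year=last_test.year + 3)
    except ValueError:  # 29 February with a non-leap target year
        return last_test.replace(year=last_test.year + 3, day=28)

team = [Tester("lead", 6, is_manager=True), Tester("t1", 3), Tester("t2", 1)]
print(red_team_issues(team))  # ['an additional tester has under two years of experience']
print(next_tlpt_due(date(2025, 3, 1)))  # 2028-03-01
```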

Testing Environment Isolation Requirements

DORA says you must keep ICT production and testing environments separate. This separation helps prevent unauthorized access, changes, or data loss in production environments.

Sometimes, financial entities can test in production environments if they:

- Show proof why such testing is needed

- Get approval from the responsible function or management

- Set up better risk controls during testing

For TLPT, financial entities should put reliable risk management controls in place. These controls help reduce potential risks to data, damage to assets, and disruption to critical functions. On top of that, firms should make sure ICT third-party service providers join the TLPT when their services are part of the testing scope.

Vulnerability Scanning Technical Parameters

DORA sets specific rules for vulnerability scanning too. Financial entities should create and use vulnerability management procedures that include:

- Weekly automated vulnerability scans on ICT assets that support critical functions

- Keeping track of third-party libraries, including open-source parts

- Ways to tell clients, counterparties, and the public about vulnerabilities

- Quick fixes and other measures based on priority

Financial entities should think about how critical the ICT assets are when fixing vulnerabilities. Their patch management should include emergency processes for updates and clear deadlines for installation.
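
The weekly scan cadence and criticality-based patching can be tracked with very little code. The following sketch assumes a simple inventory keyed by asset name and a list of findings with a severity score; the field names and sample data are invented for illustration.

```python
from datetime import date, timedelta

def overdue_scans(last_scan_by_asset, today, max_age=timedelta(days=7)):
    """Assets whose last automated scan is older than the weekly cadence."""
    return sorted(a for a, d in last_scan_by_asset.items() if today - d > max_age)

def patch_order(vulns):
    """Sort findings so critical assets come first, then higher severity scores."""
    return sorted(vulns, key=lambda v: (not v["asset_critical"], -v["severity"]))

last_scans = {
    "payments-api": date(2025, 1, 2),
    "hr-portal": date(2025, 1, 9),
    "core-ledger": date(2024, 12, 30),
}
print(overdue_scans(last_scans, today=date(2025, 1, 10)))  # ['core-ledger', 'payments-api']

vulns = [
    {"id": "V1", "asset_critical": False, "severity": 9.8},
    {"id": "V2", "asset_critical": True, "severity": 5.0},
    {"id": "V3", "asset_critical": True, "severity": 7.5},
]
print([v["id"] for v in patch_order(vulns)])  # ['V3', 'V2', 'V1']
```

Sorting on asset criticality before severity is a design choice that mirrors the text above; some teams may prefer a combined risk score instead.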

Testing Documentation and Evidence Standards

Detailed documentation is key to DORA's testing framework. For TLPT, financial entities should provide:

- A summary of what they found after testing

- Detailed plans to fix any vulnerabilities they found

- Proof that TLPT follows all regulatory requirements

Authorities will review and provide an attestation to confirm the test met all requirements. This helps other authorities recognize the TLPT results. The attestation proves compliance during regulatory checks.

For vulnerability scanning, financial entities should record every vulnerability they find and track how it gets fixed. They should also document all patch management fully, including the automated tools they use to spot software and hardware patches.

These advanced testing requirements help DORA create a standard way to confirm security across European financial firms. This ensures reliable operational resilience against new digital threats.

ICT Third-Party Risk Technical Management

Chapter V of the EU DORA regulation sets technical requirements for managing ICT third-party risks. Financial entities must implement sophisticated control measures that go beyond regular vendor management practices.

Technical Due Diligence Requirements for Service Providers

Financial entities must assess ICT third-party service providers before signing any contracts. The pre-contractual phase requires verification of several key aspects. Service providers need to show:

- They have enough skills, expertise, and the right financial, human, and technical resources

- Their information security standards and organizational structure meet requirements

- They follow good risk management practices and internal controls

The assessment should show if providers use proven risk mitigation and business continuity measures. Companies can gather evidence through independent audits, third-party certifications, or internal audit reports from the ICT service provider.

API and Integration Security Standards

API security plays a crucial role in third-party risk management under DORA. Companies must discover, risk-assess, and secure every API that connects to enterprise data. They also need monitoring systems to check if API interactions stay secure throughout the relationship.

APIs often work as access points for vendors into core banking systems. DORA requires regular testing to find weaknesses in API endpoints. Organizations must find ways to detect shadow APIs - these hidden or forgotten endpoints could create security risks.
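
One common way to surface shadow APIs is to compare the endpoints seen in gateway or access logs with the documented inventory. The sketch below shows that comparison as a set difference. The endpoint paths are made up, and a real implementation would also need to normalize path parameters.

```python
def normalize(endpoints):
    """Uppercase the method and drop trailing slashes so equivalent routes match."""
    return {(method.upper(), path.rstrip("/") or "/") for method, path in endpoints}

def find_shadow_apis(documented, observed_requests):
    """Endpoints seen in traffic that are absent from the documented inventory."""
    return sorted(normalize(observed_requests) - normalize(documented))

documented = [("GET", "/v1/accounts"), ("POST", "/v1/payments")]
observed = [
    ("get", "/v1/accounts/"),
    ("POST", "/v1/payments"),
    ("GET", "/internal/debug"),
    ("POST", "/v0/payments"),
]
shadow = find_shadow_apis(documented, observed)
print(shadow)  # [('GET', '/internal/debug'), ('POST', '/v0/payments')]
```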

Exit Strategy Technical Requirements

Each contract with ICT service providers needs an exit strategy. These plans should be realistic and based on likely scenarios. The plans must cover:

- Unexpected service disruptions

- Cases where service delivery fails

- Sudden contract terminations

Teams should review and test exit plans regularly to make sure they work. The implementation schedules should match the exit and termination terms in contracts.

Subcontractor Chain Technical Oversight

DORA brings new rules for watching over subcontractors because many critical functions rely on complex supply chains. Financial companies must know every ICT subcontractor supporting critical functions. The financial entity still holds full responsibility for managing risks.

Contracts must clearly state if critical functions can be subcontracted. They should also specify when providers need to notify about major subcontracting changes. Financial entities can object to proposed changes that exceed their risk comfort level.

The regulation gives financial entities the right to end contracts if ICT providers make major subcontracting changes without approval or despite objections.
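
Knowing every subcontractor behind a critical function means walking the whole chain, not just the first tier. Here is a minimal sketch of that walk over a register of direct subcontracting relationships. The provider names and the register structure are hypothetical.

```python
from collections import deque

# Hypothetical register: provider -> direct subcontractors.
SUBCONTRACTORS = {
    "CloudHost": ["DataCenterCo", "BackupVendor"],
    "DataCenterCo": ["PowerSupplyCo"],
    "BackupVendor": [],
    "PowerSupplyCo": [],
}

def full_chain(provider, register):
    """Breadth-first walk returning every direct and indirect subcontractor."""
    seen, queue = [], deque(register.get(provider, []))
    while queue:
        current = queue.popleft()
        if current in seen or current == provider:
            continue  # guards against cycles in the register
        seen.append(current)
        queue.extend(register.get(current, []))
    return seen

chain = full_chain("CloudHost", SUBCONTRACTORS)
print(chain)  # ['DataCenterCo', 'BackupVendor', 'PowerSupplyCo']
```

Comparing this list before and after a provider announces a subcontracting change gives a quick view of what the financial entity is being asked to accept.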

Incident Reporting Technical Infrastructure

DORA requires financial entities to set up advanced systems that detect, classify and report major ICT-related incidents. These requirements set new standards for operational resilience in the EU's financial sector.

Real-time Monitoring System Requirements

Financial entities must deploy real-time monitoring capabilities to detect unusual activities quickly under DORA rules. They need systems to monitor, manage, log and classify ICT-related incidents. Organizations should collect and analyze data from different sources to ensure early detection. They must also assign specific roles and duties for their monitoring functions.

Each financial entity should create an ICT-related incident policy that covers every step of incident management. The policy needs clear triggers for detecting and responding to ICT-related incidents. The firms should keep incident evidence to help with later analysis, especially for incidents that could have serious effects or happen again.

Automated Classification System Specifications

Financial entities must use automated systems with clear thresholds to classify incidents under DORA. The rules define technical standards that determine which "major ICT-related incidents" must be reported. These classification systems should follow the criteria in Commission Delegated Regulation 2024/1772, which sets severity thresholds.

The automated systems should help maintain consistent classification across incident types. The European Supervisory Authorities have made reporting simpler by reducing the fields needed in the first notifications.
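
Threshold-based classification can be sketched in a few lines. Note that every threshold and criterion below is a placeholder chosen for illustration; the binding criteria and values are set out in the delegated regulation and should be taken from there, not from this example.

```python
from dataclasses import dataclass

@dataclass
class Incident:
    affects_critical_function: bool
    clients_affected_pct: float
    downtime_hours: float
    data_loss: bool

# Placeholder thresholds for illustration only, not the regulatory values.
CLIENT_PCT_THRESHOLD = 10.0
DOWNTIME_THRESHOLD_HOURS = 2.0
MIN_CRITERIA_MET = 2

def classify(incident: Incident) -> str:
    """Label an incident 'major' when enough placeholder criteria are met."""
    criteria_met = sum([
        incident.clients_affected_pct >= CLIENT_PCT_THRESHOLD,
        incident.downtime_hours >= DOWNTIME_THRESHOLD_HOURS,
        incident.data_loss,
    ])
    if incident.affects_critical_function and criteria_met >= MIN_CRITERIA_MET:
        return "major"
    return "non-major"

print(classify(Incident(True, 15.0, 3.0, False)))   # major
print(classify(Incident(False, 50.0, 10.0, True)))  # non-major
```

Keeping the thresholds in named constants makes it easy to update them in one place and to show auditors exactly which values the system applies.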

Secure Reporting Channel Technical Standards

Financial entities must create secure channels to report incidents under DORA. The initial notification is due within 4 hours of classifying an incident as major, and no later than 24 hours after detection. The rules require intermediate reports within 72 hours and final reports within a month.

Organizations need secure communication systems that work with standard reporting templates. The Reporting ITS provides specific templates and methods for secure channel reporting. Weekend reporting now focuses mainly on credit institutions, trading venues, central counterparties, and entities that could affect the whole system.
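
The reporting windows can be turned into concrete timestamps once detection and classification times are known. The sketch below makes two assumptions that are not stated in this section: the 72-hour window is counted from the initial notification deadline, and "a month" is approximated as 30 days. Check both against the reporting standards before relying on them.

```python
from datetime import datetime, timedelta

def reporting_deadlines(detected_at: datetime, classified_at: datetime) -> dict:
    """Deadlines as described in this section; a sketch, not legal advice."""
    # Whichever limit comes first: 4 hours after classification or 24 hours after detection.
    initial = min(classified_at + timedelta(hours=4), detected_at + timedelta(hours=24))
    intermediate = initial + timedelta(hours=72)  # assumption: counted from the initial deadline
    final = intermediate + timedelta(days=30)     # assumption: "a month" taken as 30 days
    return {
        "initial_notification": initial,
        "intermediate_report": intermediate,
        "final_report": final,
    }

deadlines = reporting_deadlines(
    detected_at=datetime(2025, 3, 3, 9, 0),
    classified_at=datetime(2025, 3, 3, 15, 0),
)
for name, due in deadlines.items():
    print(name, due.isoformat())
# initial_notification 2025-03-03T19:00:00
# intermediate_report 2025-03-06T19:00:00
# final_report 2025-04-05T19:00:00
```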

Conclusion

DORA represents a defining moment for the EU financial sector's digital resilience. Financial organizations must upgrade their technical setup, testing methods and management structure by January 2025. These changes will help them meet strict compliance rules.

The rules specify exact technical steps in different areas. Teams need to focus on network division, backup systems and advanced testing methods. Board members now have bigger roles. They must understand technical aspects to manage ICT risks properly.

Managing third-party relationships is vital under DORA. Financial firms must set up strong technical reviews, secure API connections and detailed exit plans for ICT providers. The technical rules also include real-time monitoring systems and automated ways to classify incidents. These systems help keep operations running smoothly.

Companies should start making these changes now to succeed with DORA. This early start lets financial firms adjust their systems, methods and paperwork to match DORA's detailed framework before enforcement begins.

Google Agentspace has become a revolutionary force in enterprise AI adoption, with major companies like Wells Fargo, KPMG, and Banco BV leading the way. The platform combines powerful AI agents, enterprise search capabilities, and company data into one solution that runs on Gemini's advanced reasoning capabilities.

Companies of all sizes now utilize Agentspace to boost employee productivity through its no-code Agent Designer. Teams can create custom AI agents regardless of their technical expertise. The platform provides solutions for sales, marketing, HR, and software development teams. Pricing begins at $9 monthly per user for NotebookLM for Enterprise and goes up to $45 for the Enterprise Plus tier with additional features.

This piece will show you how Google Agentspace works. You'll learn about its core features and practical strategies to discover the full potential of AI transformation for your organization.

What is Google Agentspace: Core Components and Architecture

Google Agentspace marks a major step forward in enterprise AI technology. It combines Gemini's reasoning capabilities with Google-quality search and enterprise data access. The platform connects employees with AI agents smoothly, regardless of where company data is stored.

Gemini-powered AI foundation for enterprise search

Google Agentspace builds on Gemini, Google's advanced AI model that gives the platform its intelligence and reasoning abilities. This combination helps Agentspace provide conversational support, tackle complex questions, suggest solutions, and take action based on each company's information.

The platform turns scattered enterprise content into practical knowledge. It builds a complete enterprise knowledge graph for each customer that links employees to their teams, documents, software, and available data. This smart connection system understands context in ways that go far beyond what traditional keyword search can do.

Google Agentspace works through three main tiers:

- NotebookLM Enterprise: The foundation layer that enables complex information synthesis

- Agentspace Enterprise: The core search and discovery layer across enterprise data

- Agentspace Enterprise Plus: The advanced layer for custom AI agent deployment

Each tier adds to the previous one and creates an ecosystem where information flows naturally. The platform's security runs on Google Cloud's secure-by-design infrastructure. It has role-based access control (RBAC), VPC Service Controls, and IAM integration to protect data and ensure compliance.

NotebookLM Plus integration for document synthesis

NotebookLM Plus is a vital part of the Agentspace architecture that offers advanced document analysis and synthesis tools. Google started with NotebookLM as a personal research and writing tool. Now they've expanded its features for business use through NotebookLM Plus and Enterprise editions.

NotebookLM Enterprise lets employees upload information for synthesis, find insights, and work with data in new ways. Users can create podcast-like audio summaries from complex documents. The system supports more file types than the consumer version, including DOCX and PPTX. Users also get higher limits for notebooks, sources, and queries.

NotebookLM Enterprise runs in the customer's Google Cloud environment. This setup keeps all data within the customer's Google Cloud project and prevents external sharing. System administrators can manage it through the Google Cloud console. Users access notebooks through project-specific URLs and use preset IAM roles for access control.

Google has started rolling out an experimental version of Gemini 2.0 Flash in NotebookLM. This update will likely make the system faster and more capable within the Agentspace ecosystem.

Multimodal search capabilities across enterprise data

Google Agentspace stands out because of its multimodal search features that work with many types of data and storage systems. The platform understands text, images, charts, infographics, video, and audio. It finds relevant information in any format or storage location.

The multimodal search feature provides one company-branded search agent that acts as a central source of truth. It runs on Google's search technology and uses AI to understand what users want and find the most relevant information. The system works with both unstructured data like documents and emails, and structured data in tables.

The architecture has ready-made connectors for popular third-party apps that work smoothly with:

- Confluence

- Google Drive

- Jira

- Microsoft SharePoint

- ServiceNow

- Salesforce

- And more

This connection system helps employees access and search relevant data sources without switching apps. Agentspace works as a smart layer on top of existing enterprise systems instead of replacing them.

A recent upgrade integrates Agentspace's unified enterprise search directly into the Chrome search bar. Employees can now use the platform's search, analysis, and synthesis features without leaving their main work environment.

This well-designed architecture makes Google Agentspace a complete package. It combines Gemini's AI capabilities with enterprise data access and specialized agent features in a secure, adaptable framework built for business needs.

Building Custom AI Agents with No-Code Agent Designer

The game-changing feature of Google Agentspace makes it easier to create AI agents. The new no-code Agent Designer helps employees with any technical skill level build customized AI assistants. They can do this without writing code.

Step-by-step agent creation process

Anyone can learn to create a custom AI agent in Google Agentspace through a simple process. The first step opens the Agent Designer within the Agentspace platform. You then describe what you want your agent to do. The system takes this natural language input and sets up the agent's main functions and capabilities.

The next crucial step lets you choose which data sources your agent should access. This choice determines what information your agent can find and use during interactions. You then define specific actions your agent can perform, like searching documents, creating summaries, or linking to other enterprise systems.

Google offers the Vertex AI Agent Development Kit as another option for advanced users. This developer-focused tool has a growing library of connectors, triggers, and access controls. Developers can build complex agents and publish them directly to Agentspace.

Template selection and customization options

Google Agentspace offers various templates as starting points for different use cases. These templates help different departments:

- Business analysts create agents that find industry trends and generate data-driven presentations

- HR teams build agents that streamline employee onboarding

- Software engineers develop agents that spot and fix bugs proactively

- Marketing teams make agents for performance analysis and campaign optimization

The platform goes beyond basic templates. The Agent Designer's easy-to-use interface lets users adjust how agents work with enterprise data sources. You can customize how search results appear, add summaries, and create follow-up prompts.

Testing and refining agent performance

Testing becomes crucial before deployment once you set up your agent. The Agent Designer has built-in testing tools that let you simulate user interactions. This ensures your agent responds well to different inputs.

Key testing areas include:

- Accuracy of information retrieval

- Relevance of responses

- Proper connection to data sources

- Appropriate action execution

The platform lets you make conversational adjustments when issues arise. You can guide the agent to improve itself based on feedback. This continuous improvement process helps your agent get better through real-world usage and feedback.
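
Outside the built-in tools, the same testing idea can be captured in a small regression harness that replays prompts and checks replies. The sketch below uses a stub function in place of a real agent, because Agentspace's own API is not covered here. The prompts, expected phrases, and `stub_agent` are all invented for illustration.

```python
def evaluate(agent, cases):
    """Fraction of test prompts whose reply contains the expected phrase."""
    passed = [c for c in cases if c["expect"].lower() in agent(c["prompt"]).lower()]
    return len(passed) / len(cases)

def stub_agent(prompt: str) -> str:
    # Stand-in for a real agent call; replace with your own client code.
    answers = {
        "vacation policy": "Employees receive 25 days of paid leave.",
        "expense limit": "Meals are reimbursed up to 50 EUR per day.",
    }
    for key, answer in answers.items():
        if key in prompt.lower():
            return answer
    return "I could not find that information."

cases = [
    {"prompt": "What is our vacation policy?", "expect": "25 days"},
    {"prompt": "What is the expense limit for meals?", "expect": "50 EUR"},
    {"prompt": "Who approves travel?", "expect": "manager"},
]
score = evaluate(stub_agent, cases)
print(f"{score:.2f}")  # 0.67
```

Rerunning the same cases after each adjustment shows whether a change improved the agent or broke something that used to work.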

Deployment strategies for enterprise-wide adoption

The next challenge comes after you perfect your custom agent - rolling it out across your organization. Google Agentspace solves this with the Agent Gallery. This central hub helps employees discover all available agents in your enterprise.

The Agent Gallery works with an allowlist and shows employees agents from multiple sources:

- Custom agents built by internal teams

- Google's pre-built agents

- Partner-developed agents

- Agents from external platforms

This unified approach breaks down traditional enterprise tool barriers. The platform stands out by working with agents from external platforms like Salesforce Agentforce and Microsoft Copilot. This creates a seamless experience.

Smart deployment targets specific teams that benefit most from particular agents. Linking agents to relevant team data sources keeps adoption rates high. Employees see immediate value from AI assistance that fits their context.

The Agent Designer transforms how enterprises implement AI. It moves from developer-focused to user-focused creation while keeping options open for complex technical solutions when needed.

Enterprise Search Implementation with Google Agentspace

Setting up Google Agentspace takes careful planning to maximize its potential. Traditional search systems only work with keywords, whereas Agentspace understands and searches across text, images, charts, videos, and audio files.

Setting up company-branded search experiences

You need to create a company-branded search experience as your organization's central source of truth. Start by opening your Google Cloud console and searching for "agent builder." Enable the API if you're using it for the first time. Next, click "apps" from the left panel and select "create a new app." Choose the "enterprise search and assistant" option, which is in preview mode.

This setup creates a search agent customized to your company's brand identity. Your employees can access this unified search through a web link. They can ask questions, see search suggestions, and create documents from one interface. Google has integrated Agentspace's search features directly into Chrome Enterprise. This allows employees to use these capabilities from their browser's search box.

Configuring data source connections

Google Agentspace Enterprise's strength comes from connecting to different data sources. Here's how to set up these connections:

- Click on "data sources" from the left panel in the Google Cloud console

- Select "create data store" and choose from available connectors

- Configure authentication for your selected data source

- Define synchronization settings (one-time or periodic)

Agentspace has ready-made connectors for many applications including:

- Document management: Google Drive, Box, Microsoft SharePoint

- Collaboration tools: Slack, Confluence, Teams

- Project management: Jira Cloud

- Customer data: Salesforce

- IT service management: ServiceNow

Managing access controls is vital during configuration. Agentspace follows the source application's access control lists (ACLs). This means indexed data keeps the original system's permissions. Your employees will only see results for content they can access. You won't need to create custom permission rules.
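
The effect of ACL-aware search is easy to picture with a toy example. The snippet below is a conceptual sketch of permission filtering, not Agentspace's implementation. The documents, group names, and `visible_results` function are hypothetical.

```python
def visible_results(results, user_groups):
    """Keep only documents whose source ACL shares a group with the user."""
    return [r["title"] for r in results if r["acl"] & user_groups]

results = [
    {"title": "Q3 board deck", "acl": {"executives"}},
    {"title": "Onboarding guide", "acl": {"all-staff"}},
    {"title": "Payroll export", "acl": {"hr", "finance"}},
]
user_groups = {"all-staff", "finance"}
print(visible_results(results, user_groups))  # ['Onboarding guide', 'Payroll export']
```

Because the permissions travel with each indexed document, two employees running the same query can legitimately see different result lists.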

Implementing RAG for improved search accuracy

Retrieval Augmented Generation (RAG) makes Google Agentspace's search more accurate. Enable document chunking when you create your search data store to implement RAG well. This breaks documents into smaller, meaningful parts during ingestion. The result is better relevance and less work for language models.

The layout parser in your document processing settings should be configured for the best RAG setup. This parser spots document elements like headings, lists, and tables. It enables content-aware chunking that keeps meaning intact. You can choose which file types should use layout parsing. This works great for HTML, PDF, or DOCX files with complex structures.

Agentspace gives you three parsing choices: digital parser for machine-readable text, OCR parsing for scanned PDFs, and layout parser for structured documents. The layout parser stands out because it recognizes content elements and structure hierarchy. This improves both search relevance and answer quality.
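To make content-aware chunking concrete, here is a small illustrative sketch. It does not call the Agentspace layout parser. It assumes the document has already been reduced to lines where headings start with "#", and the `chunk_by_headings` function and its `max_chars` parameter are hypothetical.

```python
def chunk_by_headings(lines, max_chars=200):
    """Split text into chunks at headings and keep the heading path with each chunk."""
    chunks = []
    path = []    # current heading hierarchy, e.g. ["Travel policy", "Approvals"]
    buffer = []  # body lines collected under the current heading

    def flush():
        text = " ".join(buffer).strip()
        if text:
            # Crude size cap: cut oversized sections into pieces of at most max_chars.
            for start in range(0, len(text), max_chars):
                chunks.append({"headings": list(path), "text": text[start:start + max_chars]})
        buffer.clear()

    for line in lines:
        if line.startswith("#"):
            flush()  # close the previous section before the heading path changes
            level = len(line) - len(line.lstrip("#"))
            del path[level - 1:]  # drop headings at the same or a deeper level
            path.append(line.lstrip("#").strip())
        elif line.strip():
            buffer.append(line.strip())
    flush()
    return chunks


doc = [
    "# Travel policy",
    "Book through the portal.",
    "## Approvals",
    "Trips over 500 EUR need manager sign-off.",
    "## Expenses",
    "Submit receipts within 30 days.",
]

chunks = chunk_by_headings(doc)
for chunk in chunks:
    print(" > ".join(chunk["headings"]), "|", chunk["text"])
```

Each chunk keeps its ancestor headings, so a passage about approvals is still recognizable as part of the travel policy when it is retrieved on its own.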

Search analytics and continuous improvement

Google Agentspace provides powerful analytics tools in the Google Cloud console after implementation. These tools help you learn about search performance, query patterns, and how users interact with the system. Administrators can spot areas that need improvement.

Users can rate search results and generated answers in real-time. This feedback helps the system get better based on actual use. You can also see analytics by query types, data sources, and user groups to find specific areas to improve.

Look at search analytics often to find common queries with low satisfaction rates. Check which data sources users access most and keep them properly synced. You can adjust boosting and burying rules to improve how content appears in search results based on relevance.
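As a rough sketch of that review, the snippet below flags frequent queries with a low share of helpful ratings. It assumes you have feedback available as (query, helpful) pairs; that format, the `low_satisfaction_queries` function, and its thresholds are assumptions made for illustration, not an Agentspace export format.

```python
from collections import defaultdict


def low_satisfaction_queries(feedback, min_count=3, max_rate=0.5):
    """Return (query, total ratings, helpful rate) for frequent, poorly rated queries."""
    stats = defaultdict(lambda: [0, 0])  # query -> [helpful votes, total votes]
    for query, helpful in feedback:
        key = query.strip().lower()  # treat "VPN setup" and "vpn setup" as one query
        stats[key][0] += int(helpful)
        stats[key][1] += 1

    flagged = [
        (query, total, helpful / total)
        for query, (helpful, total) in stats.items()
        if total >= min_count and helpful / total <= max_rate
    ]
    # Most frequent queries first, then the lowest satisfaction rate.
    return sorted(flagged, key=lambda row: (-row[1], row[2]))


feedback = [
    ("vpn setup", False), ("VPN setup", False), ("vpn setup", True), ("vpn setup", False),
    ("expense policy", True), ("expense policy", True), ("expense policy", True),
    ("parental leave", False),
]

flagged = low_satisfaction_queries(feedback)
print(flagged)
```

Queries that surface here are candidates for a data source sync check or a boosting rule.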

These implementation steps help organizations build a powerful enterprise search system. It keeps getting better while maintaining strict access controls and data security.

Integrating Google Agentspace with Enterprise Systems

A seamless connection between Google Agentspace and enterprise infrastructure creates real business value. The platform turns scattered data into actionable information by connecting information silos through powerful integrations, without disrupting existing workflows.

Connecting to Google Workspace applications

Google Agentspace integrates deeply with Google Workspace applications to create a unified ecosystem where information moves freely between tools. The Workspace integration lets Agentspace draft Gmail responses, provide email thread summaries, and schedule meetings by checking Google Calendar availability automatically.

Google Drive integration significantly improves document management. Employees can search their organization's entire document library instantly after connecting Agentspace to Drive. The system maintains existing sharing permissions, so users see only the documents they have authorization to access.

The true value of these integrations shows when multiple Workspace applications work together. An employee asking about quarterly sales projections gets data from Drive spreadsheets, relevant Calendar events, and Gmail conversation context—all in one response.

Third-party integrations with Salesforce, Microsoft, and more

Agentspace connects to many third-party applications through dedicated connectors, which eliminates switching between different systems. Document management expands to Box and Microsoft SharePoint, where teams can search, create reports, and get AI-powered summaries of long documents.

Microsoft users get complete integration with:

- Outlook email and calendar for communication management

- SharePoint Online for document access and search

- Teams for collaboration content

Salesforce integration helps sales and customer service teams manage leads, update CRM records, and discover AI-powered sales insights. IT and engineering teams can utilize Jira, Confluence, GitHub, and ServiceNow connections to track tickets and manage documentation better.

Agentspace also supports agents built on external platforms. Teams can upload, access, and deploy Salesforce Agentforce or Microsoft Copilot agents directly in their Agentspace environment, which reflects Google's emphasis on interoperability.

API connectivity options for custom applications

Agentspace offers flexible API connectivity options for organizations with special needs. The platform connects to Dialogflow agents to create custom conversational experiences beyond standard features. These agents work as deterministic, fully generative, or hybrid solutions and connect to any service.

Custom agent connections help enterprises build sophisticated workflows for specific business tasks. A financial institution could create agents that handle fraud disputes, process refunds, manage lost credit cards, or update user records while maintaining security controls.

Google added support for the open Agent2Agent (A2A) Protocol. This breakthrough lets developers pick their preferred tools and frameworks while staying compatible with the broader Agentspace environment.

Agentspace maintains strict security protocols across all integration options. The platform follows source application access controls, manages role-based access, and guarantees data residency—keeping sensitive information safe as it moves between systems.

Real-World Applications Across Business Departments

Companies that use Google Agentspace see clear benefits in their departments. Their teams make better decisions and get more work done.

Marketing team use cases and ROI metrics

Marketing teams use Google Agentspace to create content that matches their brand voice. They also get evidence-based insights about their campaigns. Teams can create personalized messages, product suggestions, and deals based on customer information. Accenture has used AI agents to improve a major retailer's customer support by adding self-service options that improve the customer experience. Other ways teams use it include:

- Creating quality blogs and social posts that match brand tone

- Making audio summaries to speed up market research

- Finding content gaps through AI analysis of feedback

Capgemini has built AI agents with Google Cloud. These agents help retailers take orders through new channels and speed up their order-to-cash process.

HR department implementation examples

HR teams have simplified their administrative work with custom Agentspace agents. These agents answer employee questions about benefits, pay, and HR rules. This lets HR staff focus on more important work. AI helps match the right talent to specific projects.

HR departments use Agentspace in several ways. They help new employees settle in, create surveys to find areas of improvement, and give staff easy access to company policies. Wagestream, a financial wellbeing platform, handles over 80% of internal customer inquiries with Gemini models.

IT and development team efficiency gains

Software teams use Google Agentspace to find and fix bugs faster, which speeds up product releases. Developers check code quality, find existing solutions, and spot potential problems early.

Cognizant created an AI agent with Vertex AI and Gemini that helps legal teams write contracts. It assigns risk scores and suggests ways to improve operations. Multimodal, part of Google for Startups Cloud AI Accelerator, uses AI agents to handle complex financial tasks. These agents process documents, search databases, and create reports.

Finance and legal compliance applications

Google Agentspace helps finance and legal teams handle compliance better. It monitors regulations and reviews documents automatically. Legal teams can watch regulatory processes without manual work. They run smart compliance checks and work better with business teams.

Finnit, another Google for Startups Cloud AI Accelerator member, offers AI solutions for corporate finance. Their system cuts accounting procedures time by 90% and improves accuracy. Legal departments can now work on strategic projects instead of processing documents repeatedly.

Google Agentspace Pricing and Deployment Options

Organizations need to understand the costs of a Google Agentspace implementation to select the right tier for their needs. Google provides three pricing tiers that offer different levels of features and capabilities.

NotebookLM for Enterprise ($9/user/month)

NotebookLM for Enterprise serves as the entry-level option. The tier has:

- A user interface that matches the consumer version

- Basic setup without pre-built connectors

- Support for Google and non-Google identity

- Sec4 compliance certification

- Cloud terms of service protections

NotebookLM Enterprise runs in your Google Cloud project. Your data stays within your environment and cannot be shared externally. This tier works well for teams focused on document synthesis and analysis.

Agentspace Enterprise tier ($25/user/month)

The middle tier enhances NotebookLM's capabilities with detailed search features. Users get access to:

- Blended search across enterprise apps

- Document summarization tools

- Source material citations

- People search capabilities

- Search across text, images and other formats

- All NotebookLM Enterprise features

This tier acts as your company's source of truth through its branded multimodal search agent. The higher price brings many more features beyond simple document analysis.

Agentspace Enterprise Plus features ($45/user/month)

The premium tier is the most feature-rich option and unlocks the full potential of Google Agentspace. Key features include:

- Follow-up questions for deeper exploration

- Actions in Google and third-party apps

- Document upload and Q&A interactions

- Tools to create custom automated workflows

- Research agents for gathering detailed information

Organizations can create expert agents at this level to automate business functions across departments like marketing, finance, legal and engineering.
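The three list prices above make it easy to estimate license spend for a mixed rollout. The sketch below is simple arithmetic on those prices. The tier keys, the `annual_license_cost` function, and the seat counts are made up for illustration, and the result covers subscriptions only.

```python
# Per-user monthly list prices in USD, as quoted in the tier headings above.
PRICE_PER_USER_MONTH = {
    "notebooklm_enterprise": 9,
    "agentspace_enterprise": 25,
    "agentspace_enterprise_plus": 45,
}


def annual_license_cost(seats):
    """Sum the yearly subscription cost for a dict of tier -> number of seats."""
    return sum(PRICE_PER_USER_MONTH[tier] * count * 12 for tier, count in seats.items())


# Hypothetical rollout: most staff on the entry tier, a few power users on Plus.
seats = {
    "notebooklm_enterprise": 200,
    "agentspace_enterprise": 50,
    "agentspace_enterprise_plus": 10,
}

print(annual_license_cost(seats))  # 42000
```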

Calculating total cost of ownership

The total cost calculation needs to factor in several elements beyond subscription pricing. Organizations should track:

- Infrastructure costs (CPU, memory, storage, data egress)

- Indirect costs (personnel, software tools, migration)

- Expected growth rates

The formula sums the cost components and then scales the result: total cost = (cloud infrastructure costs + indirect costs + migration costs) × estimated growth × timeframe.
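A worked example may help. The sketch below applies the formula literally. The article does not state units, so it assumes annual cost figures, growth expressed as a single multiplier (1.1 for 10%), and a timeframe in years. The function name and all numbers are hypothetical.

```python
def total_cost_of_ownership(infrastructure, indirect, migration, growth_factor, years):
    """Apply the formula as written: summed costs x estimated growth x timeframe."""
    return (infrastructure + indirect + migration) * growth_factor * years


# Hypothetical annual figures in USD.
tco = total_cost_of_ownership(
    infrastructure=120_000,  # CPU, memory, storage, data egress
    indirect=60_000,         # personnel and software tools
    migration=20_000,        # migration effort
    growth_factor=1.1,       # assumed 10% growth, applied once
    years=3,
)
print(round(tco, 2))
```

This treats growth as one flat multiplier, as the formula does. A model that compounds growth year over year would produce a higher figure over the same period.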

Google Cloud's Migration Center provides tools to generate TCO reports. Teams can export these reports to Google Slides, Sheets, CSV or Excel formats to share with stakeholders.

Conclusion

Google Agentspace is changing how businesses work by combining Gemini-powered AI with enterprise search. In this piece, we looked at how companies can build custom AI agents, implement enterprise-wide search, and integrate their existing business systems.

The platform offers three pricing tiers that start at $9 per user per month for NotebookLM Enterprise and go up to $45 for Enterprise Plus, which makes it accessible to companies of different sizes and needs. Examples from Wagestream, Finnit, and major retailers point to gains in efficiency and customer experience across departments.

Key takeaways from our exploration include:

- Gemini-powered AI foundation enabling sophisticated reasoning and search capabilities

- No-code Agent Designer democratizing AI agent creation across skill levels

- Complete integration options with Google Workspace and third-party applications

- Reliable security measures ensuring data protection and compliance

- Measurable ROI across marketing, HR, IT, and finance departments

Google Agentspace reshapes how enterprises handle information access and workflow automation. Current adoption trends and continuous platform improvements suggest this technology will become vital for organizations that want to stay competitive in an AI-driven business world.

The comparison between MCP vs A2A has become more relevant as AI Agents transform from futuristic concepts into vital business tools. Google announced the Agent2Agent (A2A) Protocol in April 2025, giving businesses two powerful protocols to choose from.
